Data Profiler – An Open Source Solution to Explain Your Data

Gain insight into large data with Data Profiler, an open source project from Capital One

In the field of machine learning, data is a valuable resource, and understanding data is one of our most important capabilities. As data scientists and engineers, we need to be able to answer these questions for every data-intensive project we build: Is our data secure? What does the data say? How should we use the data? At Capital One, we are proud to help answer these questions with the Data Profiler, an open source project that performs sensitive data analysis on a wide range of data types.

Introducing the Data Profiler – an open source project from Capital One

Data streams often grow so large that it becomes hard, or even impossible, to monitor all the data being sent through. If sensitive data slips through, the consequences can be serious, and the leak can be difficult to stop or even notice. The goal of the Data Profiler open source project is to detect sensitive data and provide detailed statistics (distribution, pattern, type, etc.) on large batches of data. Sensitive data, which we call “sensitive entities,” is any crucial private information, such as bank account numbers, credit card information, and social security numbers.

Key features of the Data Profiler include detecting sensitive entities, generating statistics, and providing the infrastructure to build your own data labeler. For the Data Profiler, we’ve designed a pipeline that accepts a wide range of data formats, including CSV, Avro, Parquet, JSON, text, and pandas DataFrames. Whether the data is structured or unstructured, it can be profiled.

An example of how Data Profiler works

Maybe you’re a jeweler buying and selling diamonds, with a large database of your customers and transaction details. Imagine a structured dataset describing diamonds, with columns such as carat, cut, and price, and many hundreds of rows and columns.

The Data Profiler can help you learn from your data. Each column in your dataset is profiled individually to generate per-column statistics. You’ll learn the exact distribution of diamond prices, that cut is a categorical column with several unique values, that the carat column is sorted in ascending order, and, most importantly, whether each column contains sensitive data.
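As a rough illustration of what profiling each column individually means, the sketch below computes a few per-column statistics using only the Python standard library. It is not the Data Profiler API: the function names, the made-up diamond rows, and the categorical heuristic (no more than half as many distinct values as rows) are assumptions chosen for illustration.

```python
from statistics import mean, median


def column_order(values):
    """Classify a column's sort order as ascending, descending, or random."""
    pairs = list(zip(values, values[1:]))
    if all(a <= b for a, b in pairs):
        return "ascending"
    if all(a >= b for a, b in pairs):
        return "descending"
    return "random"


def profile_column(values, categorical_ratio=0.5):
    """Compute simple statistics for one column (a list of values)."""
    if not values:
        return {"count": 0, "unique": 0}
    unique = len(set(values))
    stats = {
        "count": len(values),
        "unique": unique,
        # Hypothetical heuristic: few distinct values per row suggests a categorical column.
        "categorical": unique / len(values) <= categorical_ratio,
        "order": column_order(values),
    }
    # Numeric summaries only make sense when every value is a number.
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        stats.update(min=min(values), max=max(values), mean=mean(values), median=median(values))
    return stats


def profile_table(rows):
    """Profile each column of a list-of-dicts table independently."""
    return {name: profile_column([row[name] for row in rows]) for name in rows[0]}


# Made-up rows in the spirit of the jeweler example.
diamonds = [
    {"carat": 0.21, "cut": "Ideal", "price": 326},
    {"carat": 0.23, "cut": "Premium", "price": 335},
    {"carat": 0.29, "cut": "Good", "price": 334},
    {"carat": 0.31, "cut": "Ideal", "price": 434},
    {"carat": 0.35, "cut": "Premium", "price": 552},
    {"carat": 0.42, "cut": "Ideal", "price": 620},
]
report = profile_table(diamonds)
for name, stats in report.items():
    print(name, stats)
```

Each column is summarized without looking at the others, which is what makes per-column profiling easy to parallelize or extend with new statistics.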

The Data Profiler’s machine learning model then automatically classifies columns as credit card information, email addresses, and so on. This helps you discover whether sensitive data exists in columns where it shouldn’t.
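The Data Profiler uses a machine learning model for this step. To show the idea of column-level classification without a trained model, here is a simpler rule-based stand-in: each cell gets a label from regular expressions (plus the Luhn checksum for card numbers and Python’s `ipaddress` module for IP addresses), and the column takes the majority label. The label names come from the list below; the patterns, the majority vote, and the helper names are assumptions for illustration, and the patterns are deliberately simplified.

```python
import ipaddress
import re
from collections import Counter

# Simplified patterns; real-world formats vary far more than these.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
SSN = re.compile(r"\d{3}-\d{2}-\d{4}")
CARD = re.compile(r"\d{4}(?:[ -]?\d{4}){3}")  # 16-digit cards only


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to validate payment card numbers."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def label_cell(text: str) -> str:
    """Assign one label to a single cell, falling back to BACKGROUND."""
    text = text.strip()
    if EMAIL.fullmatch(text):
        return "EMAIL_ADDRESS"
    if SSN.fullmatch(text):
        return "SSN"
    if CARD.fullmatch(text) and luhn_valid(re.sub(r"[ -]", "", text)):
        return "CREDIT_CARD"
    for label, parser in (("IPV4", ipaddress.IPv4Address), ("IPV6", ipaddress.IPv6Address)):
        try:
            parser(text)
            return label
        except ValueError:  # AddressValueError is a subclass of ValueError
            pass
    return "BACKGROUND"


def label_column(values) -> str:
    """Label a whole column by majority vote over its cells."""
    votes = Counter(label_cell(str(v)) for v in values)
    return votes.most_common(1)[0][0]


# A free-text "notes" column that should not contain card numbers.
SENSITIVE = {"EMAIL_ADDRESS", "SSN", "CREDIT_CARD"}
notes = ["call back Tuesday", "4111 1111 1111 1111", "4111-1111-1111-1111"]
label = label_column(notes)
print(label, label in SENSITIVE)  # CREDIT_CARD True
```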

Switching out Data Profiler’s machine learning model

The default Data Profiler machine learning model recognizes the following labels:

- BACKGROUND (Text)

- ADDRESS

- BAN (bank account number, 10-18 digits)

- CREDIT_CARD

- EMAIL_ADDRESS

- UUID

- HASH_OR_KEY (md5, sha1, sha256, random hash, etc.)

- IPV4

- IPV6

- MAC_ADDRESS

- PERSON

- PHONE_NUMBER

- SSN

- URL

- DATETIME

- INTEGER

- FLOAT

- QUANTITY

- ORDINAL

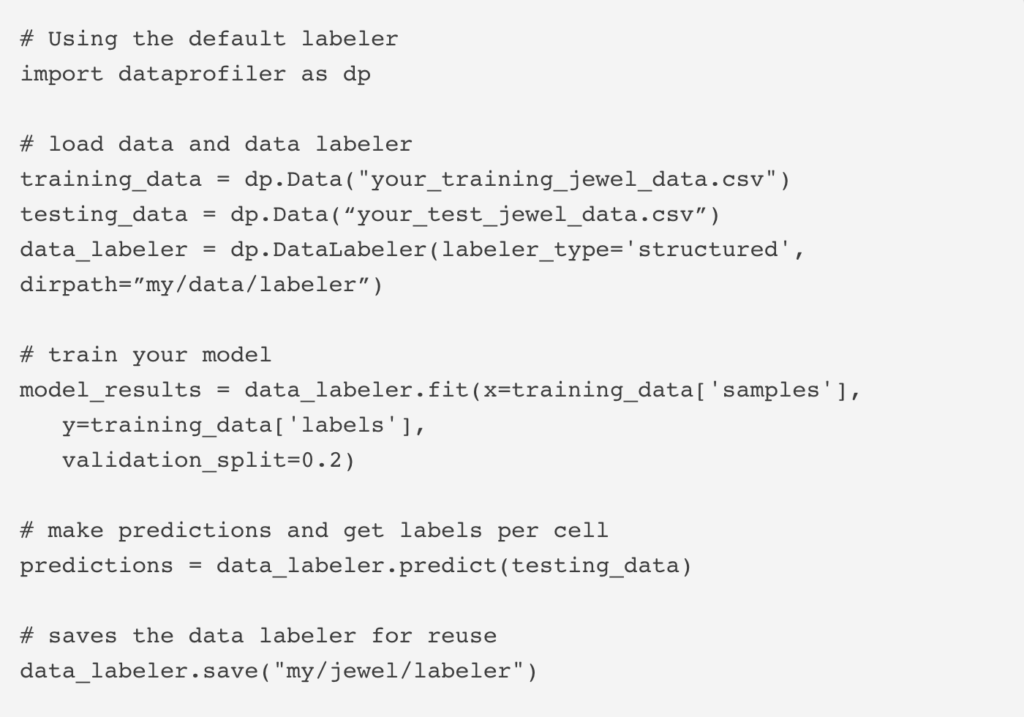

To return to our jeweler example, perhaps you regularly sell gems and need a model that identifies specific gem types. Switching models is simple because the data labeler is a pipeline within the Data Profiler that can be altered to fit your needs.

Three components make up the data labeler: a preprocessor, a model, and a postprocessor.

The preprocessor takes raw data and turns it into a format the model can accept. The model takes in that data, runs a prediction (or fit), and passes its output to the postprocessor. The postprocessor turns the model’s output into something usable by the user. One of the most important features of a data labeler is versatility: being able to switch and modify models as needed. Running multiple models on the same dataset is easy, since choosing a preexisting data labeler to train and predict with takes just a few lines of code.

Maybe you want to alter only a specific part of the labeler. Want to process the data in a unique way? Change one or both of the processors. Want new labels to classify? Change the model.
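To make the three-stage flow concrete, here is a minimal sketch in plain Python in which each stage is a function and the pipeline chains them. `LabelerPipeline`, `normalize`, `numeric_model`, `to_label_names`, and the three-label scheme are hypothetical stand-ins, not the Data Profiler’s classes; the point is only that each stage can be replaced independently.

```python
from collections import Counter
from dataclasses import dataclass, replace
from typing import Any, Callable, List

LABELS = ["BACKGROUND", "INTEGER", "FLOAT"]  # hypothetical label scheme


def normalize(cells: List[Any]) -> List[str]:
    """Preprocessor: turn raw cells into stripped, lowercase strings."""
    return [str(cell).strip().lower() for cell in cells]


def numeric_model(tokens: List[str]) -> List[int]:
    """Model: predict a label index per token (0=BACKGROUND, 1=INTEGER, 2=FLOAT)."""
    predictions = []
    for token in tokens:
        try:
            int(token)
            predictions.append(1)
        except ValueError:
            try:
                float(token)
                predictions.append(2)
            except ValueError:
                predictions.append(0)
    return predictions


def to_label_names(indices: List[int]) -> List[str]:
    """Postprocessor: map label indices back to human-readable names."""
    return [LABELS[i] for i in indices]


@dataclass(frozen=True)
class LabelerPipeline:
    """Hypothetical data labeler: preprocessor -> model -> postprocessor."""
    preprocessor: Callable[[List[Any]], Any]
    model: Callable[[Any], Any]
    postprocessor: Callable[[Any], Any]

    def predict(self, raw: List[Any]) -> Any:
        return self.postprocessor(self.model(self.preprocessor(raw)))


labeler = LabelerPipeline(normalize, numeric_model, to_label_names)
print(labeler.predict([" 42 ", "3.5", "Ruby"]))  # ['INTEGER', 'FLOAT', 'BACKGROUND']

# Swap only the postprocessor; the preprocessor and model are reused unchanged.
counting = replace(labeler, postprocessor=lambda idx: Counter(LABELS[i] for i in idx))
print(counting.predict(["1", "2", "x"]))
```

Because the stages agree only on what they pass to each other, replacing one stage leaves the other two untouched.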

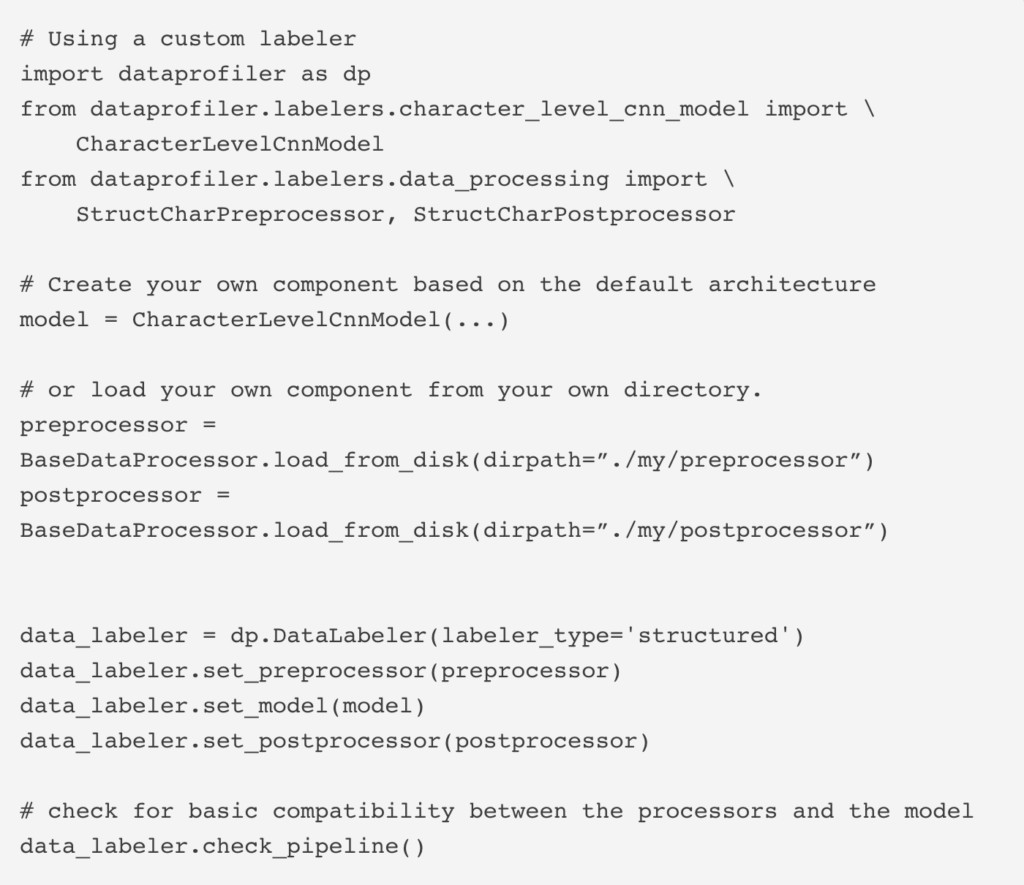

This streamlined process makes it easy to switch out any type of model or processor. Perhaps you need a CNN, an RNN, or a regex model to label with; all are possible. A model or processor can be created from the default architecture or loaded from an existing model or processor.
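A regex model that can be created from a default configuration or loaded from a saved one might look like the minimal sketch below. The class name, its `from_default`/`save`/`load` methods, the JSON file format, and the gem-type patterns (borrowed from the jeweler example) are all hypothetical, not the Data Profiler’s own model classes.

```python
import json
import re
import tempfile
from pathlib import Path


class RegexLabelModel:
    """Hypothetical regex model: the first pattern that matches a cell wins."""

    # Hypothetical "default architecture": gem-type patterns for the jeweler example.
    DEFAULT_PATTERNS = {
        "DIAMOND": r"diamond",
        "RUBY": r"ruby|rubies",
        "EMERALD": r"emerald",
        "SAPPHIRE": r"sapphire",
    }

    def __init__(self, patterns, default_label="BACKGROUND"):
        self.patterns = dict(patterns)
        self.default_label = default_label
        self._compiled = {label: re.compile(p, re.IGNORECASE) for label, p in self.patterns.items()}

    @classmethod
    def from_default(cls):
        """Create a model from the built-in default patterns."""
        return cls(cls.DEFAULT_PATTERNS)

    def save(self, path):
        """Persist the model's configuration as JSON."""
        config = {"patterns": self.patterns, "default_label": self.default_label}
        Path(path).write_text(json.dumps(config))

    @classmethod
    def load(cls, path):
        """Rebuild a model from a previously saved configuration."""
        config = json.loads(Path(path).read_text())
        return cls(config["patterns"], config["default_label"])

    def predict(self, cells):
        labels = []
        for cell in cells:
            for label, regex in self._compiled.items():
                if regex.search(cell):
                    labels.append(label)
                    break
            else:  # no pattern matched this cell
                labels.append(self.default_label)
        return labels


model = RegexLabelModel.from_default()
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "gem_model.json"
    model.save(path)
    restored = RegexLabelModel.load(path)

print(restored.predict(["1.2ct Diamond ring", "Burmese ruby", "gift card"]))
# ['DIAMOND', 'RUBY', 'BACKGROUND']
```

Since this model exposes the same kind of `predict` interface as any other model stage, it could be dropped into a pipeline in place of a CNN without touching the processors.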

Creating your own data labeler pipeline from scratch

The CharacterLevelCnnModel and the RegexModel cover many cases, but perhaps you need more than the default models. How can you incorporate your own model?

The data labeler has been designed so that users can easily build their own from scratch with the framework provided. By inheriting from the abstract base classes for the model, preprocessor, and postprocessor, you can create the individual components of the pipeline and have them work seamlessly with the existing architecture. To learn specifically how to implement your own data labeler pipeline, see the Data Profiler documentation. There are a few critical methods to implement, but beyond these, there is no limit to the type of model or processor you can create. This is especially useful when you need to run many different custom pipelines.
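The inheritance pattern described above can be sketched with Python’s `abc` module: the base classes declare abstract methods, and Python refuses to instantiate a subclass that leaves any of them unimplemented. The class and method names here (`BasePreprocessor.process`, `BaseModel.fit`/`predict`, and so on) are assumptions for illustration and do not reproduce the Data Profiler’s actual base classes or the methods they require.

```python
from abc import ABC, abstractmethod
from collections import Counter


class BasePreprocessor(ABC):
    @abstractmethod
    def process(self, data):
        """Turn raw data into model-ready input."""


class BaseModel(ABC):
    @abstractmethod
    def fit(self, inputs, labels):
        """Train on (input, label) pairs."""

    @abstractmethod
    def predict(self, inputs):
        """Return one prediction per input."""


class BasePostprocessor(ABC):
    @abstractmethod
    def process(self, predictions):
        """Turn raw predictions into user-facing output."""


class StripPreprocessor(BasePreprocessor):
    def process(self, data):
        return [str(item).strip().lower() for item in data]


class LookupModel(BaseModel):
    """Memorizes exact input -> label pairs; unseen inputs get BACKGROUND."""

    def __init__(self):
        self.table = {}

    def fit(self, inputs, labels):
        self.table.update(zip(inputs, labels))
        return self

    def predict(self, inputs):
        return [self.table.get(x, "BACKGROUND") for x in inputs]


class CountingPostprocessor(BasePostprocessor):
    def process(self, predictions):
        return {"labels": predictions, "counts": dict(Counter(predictions))}


class IncompleteModel(BaseModel):
    def predict(self, inputs):  # fit() is deliberately missing
        return inputs


def can_instantiate(cls) -> bool:
    try:
        cls()
        return True
    except TypeError:  # raised when abstract methods are left unimplemented
        return False


def run_pipeline(pre: BasePreprocessor, model: BaseModel, post: BasePostprocessor, data):
    return post.process(model.predict(pre.process(data)))


pre = StripPreprocessor()
model = LookupModel().fit(pre.process(["Ruby", "Emerald"]), ["GEM", "GEM"])
result = run_pipeline(pre, model, CountingPostprocessor(), [" ruby ", "Invoice"])
print(result)  # {'labels': ['GEM', 'BACKGROUND'], 'counts': {'GEM': 1, 'BACKGROUND': 1}}
print(can_instantiate(IncompleteModel))  # False
```

The abstract methods act as the contract between stages: any component that implements them can be combined with any other, and a component that forgets one fails at construction time rather than halfway through a run.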

Conclusion

With the Data Profiler, you’ll be able to learn what’s in your data. The ability to create and switch out your own data labeler lets the Data Profiler fit your needs directly. Because the Data Profiler is open source, if there are features you want to see added, open a GitHub issue or create a pull request on the repository. Contributors are more than welcome on this open source project.

Grant Eden, Software Developer

MS in Computer Science and Engineering from Penn State. Software Developer at Capital One performing research in Machine Learning. Skilled in Object Detection and Natural Language Processing. Really good at baking apple pies.

DISCLOSURE STATEMENT: © 2021 Capital One. Opinions are those of the individual author. Unless noted otherwise in this post, Capital One is not affiliated with, nor endorsed by, any of the companies mentioned. All trademarks and other intellectual property used or displayed are property of their respective owners.

The Featured Blog Posts series will highlight posts from partners and members of the All Things Open community leading up to the conference in October.